
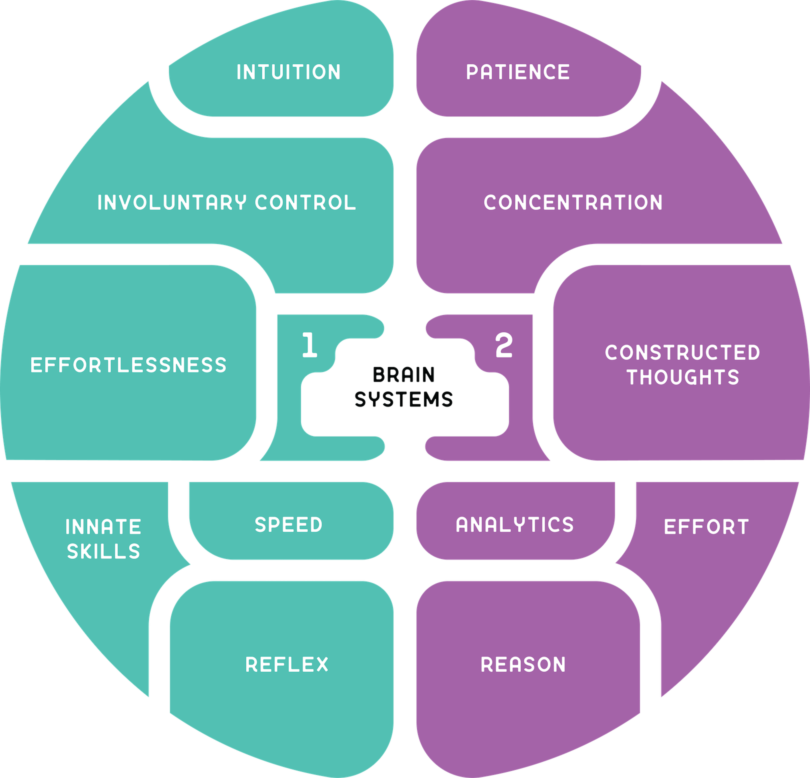

Note: you cannot dissect the brain and find these two thinking systems neatly divided into the different hemispheres.

“Cognitive biases are widely known to skew judgment, and some have particularly pernicious effects on forecasting. They lead people to follow the crowd, to look for information that confirms their views, and to strive to prove just how right they are.”

– Paul J.H. Schoemaker and Philip E. Tetlock, Harvard Business Review, May 2016

Got 6 minutes? Watch this video: "Discussing cognitive bias" with futurist Rebecca Ryan.

Humans have two ways of thinking: fast and slow. In his book Thinking, Fast and Slow, Daniel Kahneman refers to them as System 1 and System 2 (see the visual above).

We tend to rely on “fast thinking” to navigate our world. Fast thinking is on and operating whenever we’re awake. It is a nearly automatic thought process based upon intuition and pattern recognition. Fast thinking lets us determine where sounds are coming from, detect hostility in someone’s voice, read billboards, do simple math, understand simple sentences, and drive a car on an empty road.

Fast thinking, which Kahneman calls System 1 thinking, is our highly efficient standard mental operating system.

By contrast, System 2 thinking is slow and deliberate. It demands focused attention, time, and a lot of energy, which is why it’s reserved for big projects and ignored during simple processing tasks. After a day of slow thinking – like doing strategic planning or driving on the other side of the road in a foreign city – you feel brain dead. Because you are. System 2 thinking is hard work.

System 1 and System 2 thinking easily coexist, but System 1 is usually running the show. System 2 is lying low, available when called upon. All of this works very well most of the time. But we run into trouble when System 1 creeps in and takes over where System 2 thinking would be more useful.

Watch for cognitive biases

Let’s start with two System 1 shortcuts our brains use in thinking about the future:

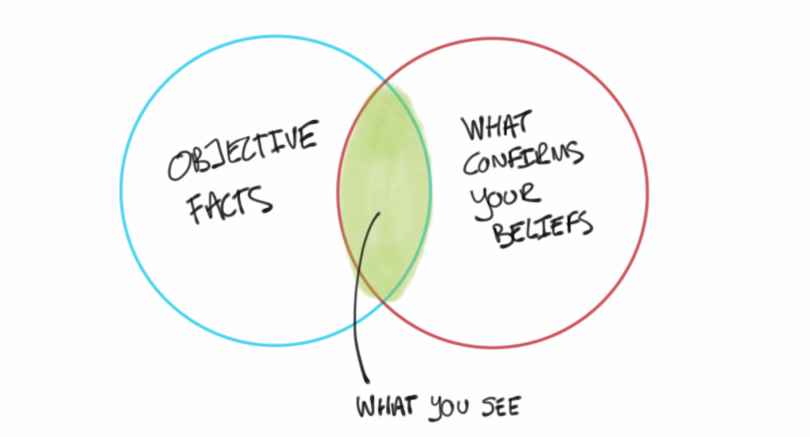

Confirmation bias

We all tend to see what confirms our beliefs rather than what challenges them. For example, we tend to tune into different news sources based on our political beliefs. When we over-rely on the sliver of overlap between all the objective facts and the set of facts that happen to confirm our beliefs, we are struggling with “confirmation bias.”

Image credit: https://fs.blog/2017/05/confir...

Even when we are shown data or information that contradicts our view, we can still interpret it in a way that reinforces our current perspective. We may, for example, react by questioning only the other side’s assumptions, sources, and research, rather than also our own. In effect: you must be wrong because I am right.

In foresight, confirmation bias can manifest as:

Thinking we remain “right” based on what was true yesterday rather than noticing new possibilities and adapting to change in a timely manner.

Creating scenarios that are not meaningfully different from each other and do not challenge critical assumptions held today. They sound like variations on today or relatively minor variations of each other.

Oversimplifying forces and their potential impacts as either all “good” or “bad.” Relatedly, failing to consider unintended side-effects.

Having only one idea of what “success” means, looks, and feels like in the future and how to get there.

Overusing a particular perspective to identify, understand, and anticipate changes, challenges, and opportunities. You might know this as having a “hobbyhorse,” a “strong opinion,” or the analogy of using a hammer on anything that even vaguely looks like a nail.

Contrast these tendencies with the following, which are part of the mindset of a “superforecaster” according to Philip Tetlock and Dan Gardner: not being wedded to any one idea or agenda (pragmatic), seeing beliefs as hypotheses to be tested (open-minded), and blending diverse views into one’s own (synthesizing).

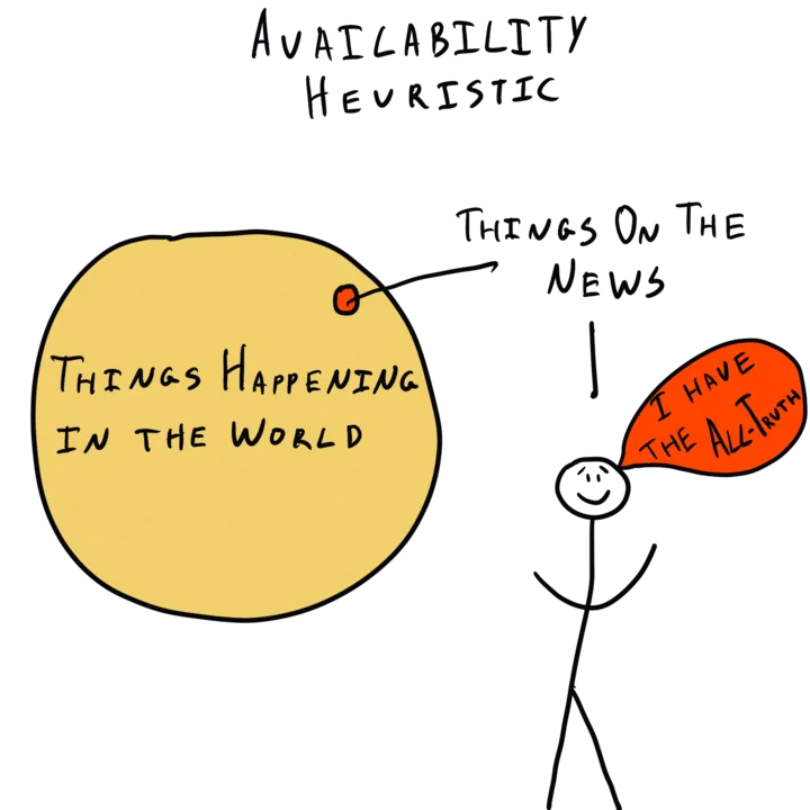

Availability bias

Availability bias is a mental shortcut that causes us to over-rely on information that’s easily available in our recollection rather than the whole universe of relevant information. The reasoning goes: if I can recall it, it must be important, or at least more important than alternative ideas that I cannot remember as readily.

For example, in a transcript titled, “Availability Heuristic: Examples and Definition,” psychologist Sarah Lavoie asks: “What is more likely to kill you, your dog or your couch?” Most people answer “my dog” because of availability. We’ve all seen stories of a dog attack. But you’ve probably never seen a news story about someone falling off her couch to her death. Lavoie continues: “In actuality, you are nearly 30 times more likely to die from falling off furniture in your own house than you are to be killed by a dog! This may seem unrealistic, but statistics show this is true.”

Image credit: https://thedecisionlab.com/bia...

Here are some examples of how availability bias can manifest in foresight:

Thinking we already know all the relevant and most critical facts about the future to guide decision-making.

Considering trends and research only in the topics that are commonly discussed in an organization or the news.

Rating a challenging future as more probable (and therefore possibly taking it more seriously) than other types of plausible scenarios, because of one’s own negative experiences or after consuming information that focuses more on what is going wrong and less on what is going right.

Perceiving a potential opportunity as more or less risky based on a recent experience or discussion.

Imagining a visionary or surprisingly successful future for 2040 that sounds and feels more like something that can plausibly come true in the next 3-5 years. For example, it was easier (at least initially) to imagine a smaller, sleeker phone or a healthier, faster horse than it was to imagine a smartphone or automobile.

And more

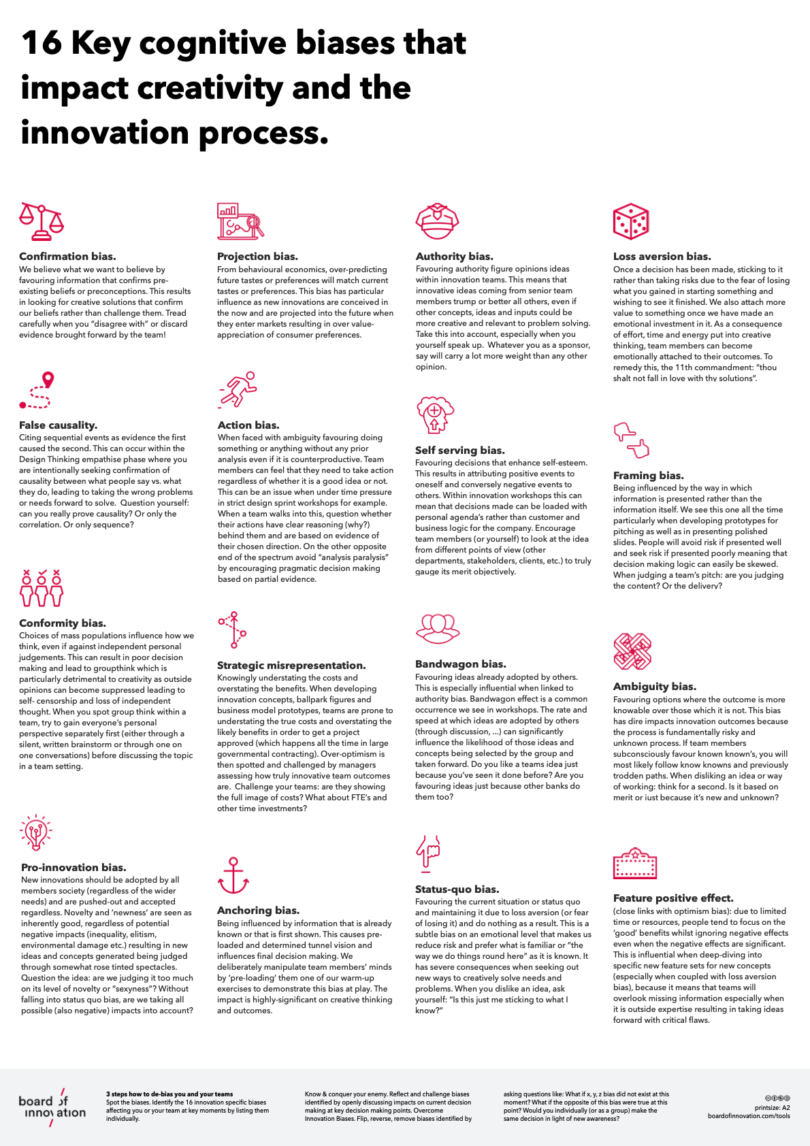

Confirmation bias and availability bias are only two examples of System 1 thinking shortcuts. Below is a poster of additional ones that can shape our ideas, hopes, visions, and plans for the future. Where and how have you experienced any of these in yourself or others?

Poster credit: https://www.boardofinnovation....

Hacks to overcome cognitive bias

You: Okay, I get it. If I don’t pay attention to my thought process, I will not be a good futurist. But what can I do about it?

Glad you asked! Here are some ideas for how to prevent System 1 from creeping in and taking over, and instead use more System 2 thinking to imagine, explore, and create futures:

Give yourself more time to think things through slowly. Don’t rush ideas, decisions, or plans out the door. For example, use the Overton Window framework to notice and reflect on what is changing and its implications for plans and actions. Push and expand your initial set of ideas of what surprising success might look, feel, and sound like. Or, when you have no idea what your future will be, you can use this 6-step process to get clear and get ready.

Invite more diverse perspectives into your thinking and decision-making process. For example, review and diversify your sources of information, leverage Red Teaming, and consider a signals & sensemaking panel.

On a larger scale, the strategic foresight process is essentially a hack: a System 2 approach to “slow think” the future and leverage diverse perspectives.

Hungry for more? Here is a 38-minute video on cognitive bias from our Futures Fridays series.


Rebecca Ryan, APF

Rebecca Ryan captains the ship. Trained as a futurist and an economist, Rebecca helps clients see what's coming - as a keynote speaker, a Futures Lab facilitator, an author of books, blogs and articles, a client advisor, and the founder of Futurist Camp. Check out her blog or watch her Q&A on how NGC helps organizations prepare for the future using Strategic Foresight. Contact Lisa Loniello for more information.

Yasemin Arikan

Yasemin (Yas) Arikan operates the research vessel. She is a futurist who uses foresight and social science methods to help clients understand how the future could be different from today and then use these insights to inform strategy and vision. Her work includes developing scenarios on the futures of public health, health care, society and technology for associations, foundations, government, and business. Bonus: Yas can help you take your gift wrapping game to the next level. And she can talk with you about it in English, German, or Turkish. Watch Yas' Q&A on how NGC helps organizations prepare for the future using Strategic Foresight.
